Key Genomic Technologies

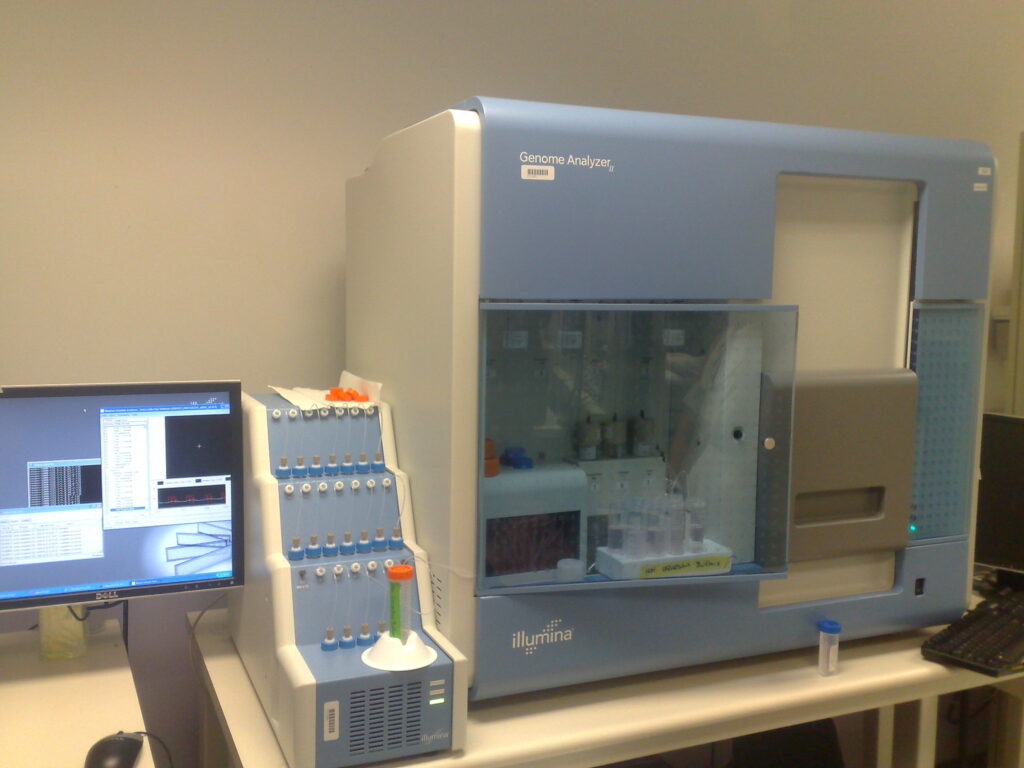

Next-generation sequencing (NGS) technologies have revolutionized genomic studies by making it possible to sequence DNA and RNA rapidly and at low cost. Illumina’s platforms, for instance, are widely used for whole-genome sequencing, metagenomics, and transcriptomics. A notable application is the sequencing of the Neanderthal genome, which provided insights into human evolution and into the genetic overlap between Neanderthals and present-day humans, illustrating the potential of NGS to answer profound evolutionary questions.

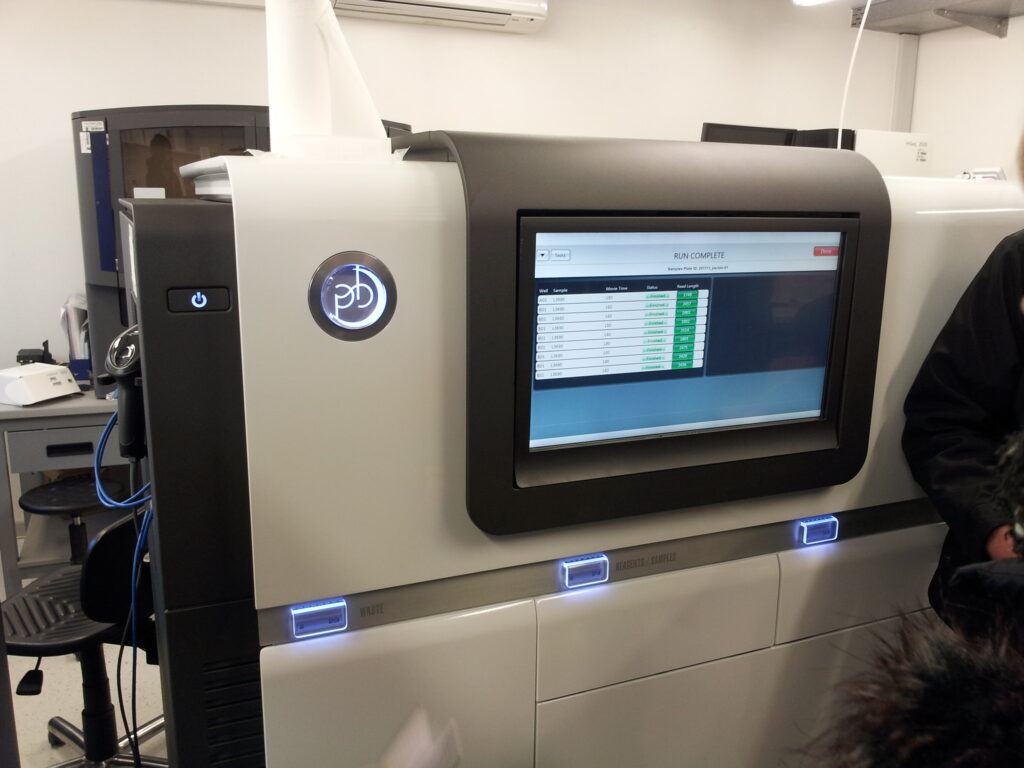

Third-generation sequencing technologies, such as PacBio’s Single Molecule, Real-Time (SMRT) sequencing and Oxford Nanopore’s platforms, produce much longer reads, which are valuable for genome assembly and for identifying structural variants. Because they read native DNA molecules, these platforms can also detect epigenetic modifications such as DNA methylation. For example, PacBio long reads were central to a greatly improved genome assembly for the Aedes aegypti mosquito, which has significantly advanced our understanding of the genetic factors that make this species an effective vector of diseases such as dengue and Zika. That knowledge is critical for controlling vector-borne diseases.

High-throughput genotyping technologies, such as SNP chips (microarrays that assay many single-nucleotide polymorphisms at once) and genotyping by sequencing (GBS), make it possible to assess genetic variation across populations at reduced cost. In agriculture, for example, SNP chips have been used to accelerate breeding programs for crops such as rice and wheat by rapidly identifying genetic markers linked to traits like drought resistance and improved yield, so that breeders can select plants that carry favorable variants.
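
To make "assessing genetic variation across populations" more concrete, the Python sketch below summarizes SNP genotype calls. It assumes genotypes are coded as the number of alternate-allele copies carried by a diploid individual (0, 1, or 2), a common convention for array and GBS data, with `None` marking a missing call. The function names and the genotype data are hypothetical. For each SNP it computes the alternate-allele frequency p and the Hardy-Weinberg expected heterozygosity 2p(1 - p), a standard summary of genetic diversity.

```python
from typing import Optional, Sequence

# Assumption: each genotype is the count of alternate alleles (0, 1, or 2)
# in a diploid individual; None marks a missing call.

def alt_allele_frequency(genotypes: Sequence[Optional[int]]) -> float:
    """Frequency of the alternate allele among non-missing diploid calls."""
    called = [g for g in genotypes if g is not None]
    if not called:
        raise ValueError("no genotype calls at this locus")
    # Each diploid individual contributes two allele copies.
    return sum(called) / (2 * len(called))


def expected_heterozygosity(p: float) -> float:
    """Hardy-Weinberg expected heterozygosity for a biallelic SNP: 2p(1 - p)."""
    return 2 * p * (1 - p)


# Hypothetical calls: three SNPs (rows) for five individuals in each population.
populations = {
    "population_A": [[0, 1, 2, 1, 0], [0, 0, 0, 1, None], [2, 2, 1, 2, 2]],
    "population_B": [[1, 1, 1, 0, 2], [0, 0, 0, 0, 0], [1, 2, 2, 1, None]],
}

for name, loci in populations.items():
    freqs = [alt_allele_frequency(locus) for locus in loci]
    mean_he = sum(expected_heterozygosity(p) for p in freqs) / len(freqs)
    print(name, "allele frequencies:", [round(p, 3) for p in freqs],
          "mean expected heterozygosity:", round(mean_he, 3))
```

Comparing these per-SNP frequencies between populations is the starting point for many population-genetic analyses. Real SNP-array or GBS datasets contain many thousands of markers per individual, so in practice the same calculation is usually vectorized over large genotype matrices.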

Challenges in Analyzing Genomic Data

Analyzing genomic data depends on bioinformatics: the computational and statistical methods needed to process and interpret the vast amounts of data that sequencers generate. Core tasks include read alignment, genome assembly, variant calling, and functional annotation. Tools such as BLAST, which searches sequence databases for similar sequences, and QIIME, which analyzes microbial community data, have become staples of ecological genomics. The primary challenges include data storage, the computational cost of processing, and the need for sophisticated algorithms that handle noise and errors in the data. Interpreting the biological significance of the results also requires robust statistical models and machine learning techniques. A case in point is the Earth BioGenome Project, which aims to sequence all eukaryotic life and faces immense bioinformatics challenges in data integration that call for new tools and collaboration platforms.
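
As an illustration of what sequence alignment computes, here is a minimal Python sketch of Smith-Waterman local alignment, the exact dynamic-programming method for finding the best-scoring local alignment of two sequences. BLAST itself does not run this full algorithm; it uses faster seed-and-extend heuristics to approximate such alignments against large databases. The scoring values (match +2, mismatch -1, gap -2) and the function name are illustrative assumptions, not BLAST's defaults.

```python
from typing import List, Tuple


def smith_waterman(a: str, b: str, match: int = 2, mismatch: int = -1,
                   gap: int = -2) -> Tuple[int, str, str]:
    """Best local alignment of a and b with a linear gap penalty.

    Returns (score, aligned_a, aligned_b); '-' marks a gap.
    Scoring parameters are illustrative assumptions.
    """
    def sub(x: str, y: str) -> int:
        # Substitution score: reward identical bases, penalize mismatches.
        return match if x == y else mismatch

    # H[i][j] = best score of a local alignment ending at a[i-1] and b[j-1].
    # The floor of 0 lets an alignment start fresh anywhere (the "local" part).
    H: List[List[int]] = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    best, best_pos = 0, (0, 0)
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            H[i][j] = max(0,
                          H[i - 1][j - 1] + sub(a[i - 1], b[j - 1]),  # (mis)match
                          H[i - 1][j] + gap,                          # gap in b
                          H[i][j - 1] + gap)                          # gap in a
            if H[i][j] > best:
                best, best_pos = H[i][j], (i, j)

    # Trace back from the highest-scoring cell until the score drops to 0.
    out_a: List[str] = []
    out_b: List[str] = []
    i, j = best_pos
    while i > 0 and j > 0 and H[i][j] > 0:
        if H[i][j] == H[i - 1][j - 1] + sub(a[i - 1], b[j - 1]):
            out_a.append(a[i - 1])
            out_b.append(b[j - 1])
            i -= 1
            j -= 1
        elif H[i][j] == H[i - 1][j] + gap:
            out_a.append(a[i - 1])
            out_b.append("-")
            i -= 1
        else:
            out_a.append("-")
            out_b.append(b[j - 1])
            j -= 1
    return best, "".join(reversed(out_a)), "".join(reversed(out_b))


score, top, bottom = smith_waterman("TTACGTTT", "ACGT")
print(score, top, bottom)
```

Here `smith_waterman("TTACGTTT", "ACGT")` returns a score of 8 with both aligned strings equal to `"ACGT"`, because the short query occurs exactly inside the longer sequence. The scoring table grows with the product of the two sequence lengths, which is why heuristic tools such as BLAST are used when searching large databases.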

Case Example: Earth BioGenome Project

The Earth BioGenome Project (EBP) is an ambitious initiative to sequence and catalog the genomes of all of Earth’s eukaryotic biodiversity. Launched in 2018, the project seeks to produce a complete DNA sequence for each of the approximately 1.5 million known species of animals, plants, protozoa, and fungi. This monumental task promises to transform our understanding of biology and biodiversity, but it also presents significant challenges, particularly in bioinformatics.

One of the foremost challenges is the sheer volume of data. Sequencing 1.5 million species could generate petabytes of genomic data, spanning a wide range of genome sizes and complexities. The genome of a simple fungus, for instance, may be relatively small and straightforward, whereas the genome of a complex flowering plant may be very large and full of repetitive elements, which are difficult to sequence and assemble accurately.

Integrating this data from diverse organisms and multiple sequencing centers worldwide requires sophisticated data management. The EBP must develop and maintain a standardized, open-access framework so that genomic data and the associated metadata (e.g., species information, geographic location, and sample source) are accessible and usable. This integration is critical for comparative genomic analyses, which can reveal evolutionary relationships, genetic diversity, and gene function across the tree of life.

Handling and processing the data also requires immense computational resources. The EBP bioinformatics pipeline spans data storage, sequence assembly, annotation, and analysis, each of which demands significant computing power and efficient algorithms. The project therefore relies on cloud computing and high-performance computing centers to manage the workflow.
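
The "petabytes" scale can be checked with rough arithmetic. The Python sketch below multiplies the number of species by an assumed average genome size, sequencing depth, and storage cost per base. Every input other than the species count is a hypothetical round number chosen for illustration, not an EBP figure: real genome sizes vary by orders of magnitude, and compression can shrink files considerably.

```python
# Back-of-envelope storage estimate for sequencing many eukaryotic genomes.
# Only SPECIES comes from the text; all other inputs are hypothetical.

SPECIES = 1_500_000           # approximate number of known eukaryotic species
MEAN_GENOME_BP = 1e9          # assumed average genome size: 1 gigabase
COVERAGE = 30                 # assumed sequencing depth (30x)
BYTES_PER_RAW_BASE = 2        # assumed: ~1 byte per base call + ~1 byte per quality score, uncompressed
BYTES_PER_ASSEMBLED_BASE = 1  # assumed: one uncompressed byte per base in a finished assembly

PETABYTE = 1e15  # bytes (decimal units)

# Raw reads: every base of every genome is read COVERAGE times.
raw_bytes = SPECIES * MEAN_GENOME_BP * COVERAGE * BYTES_PER_RAW_BASE
# Assemblies: roughly one stored base per base of genome.
assembly_bytes = SPECIES * MEAN_GENOME_BP * BYTES_PER_ASSEMBLED_BASE

print(f"raw reads:  {raw_bytes / PETABYTE:,.0f} PB")
print(f"assemblies: {assembly_bytes / PETABYTE:,.1f} PB")
```

Under these assumptions the raw reads come to roughly 90 PB and the uncompressed assemblies to about 1.5 PB, so the storage burden is dominated by raw sequencing data rather than by the finished genomes, and it scales linearly with the assumed genome size and depth.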

Many existing genomic analysis tools do not scale to the magnitude the EBP requires. New computational tools are needed that can process large-scale sequence data more efficiently, accurately assemble genomes containing complex and repetitive DNA regions, and perform in-depth comparative analyses across highly divergent species.

Media Attributions

- illumina © Jon Callas is licensed under a CC BY (Attribution) license

- © Konrad Förstner is licensed under a CC BY (Attribution) license